This article will provide you with complete root cause analysis and solution of a java.util.HashMap infinite loop problem affecting an Oracle OSB 11g environment running on IBM JRE 1.6 JVM.

This case study will also demonstrate how you can combine AIX ps –mp command and Thread Dump analysis to pinpoint you top CPU contributor Threads within your Java VM(s). It will also demonstrate how dangerous using a non Thread safe HashMap data structure can be within a multi Thread environment / Java EE container.

Environment specifications

- Java EE server: Oracle Service Bus 11g

- Middleware OS: AIX 6.1

- Java VM: IBM JRE 1.6 SR9 – 64-bit

- Platform type: Service Bus

Monitoring and troubleshooting tools

- AIX nmon & topas (CPU monitoring)

- AIX ps –mp (CPU and Thread breakdown OS command)

- IBM JVM Java core / Thread Dump (thread analysis and ps –mp data corrleation)

Problem overview

- Problem type: Very High CPU observed from our production environment

A high CPU problem was observed from AIX nmon monitoring hosting a Weblogic Oracle Service Bus 11g middleware environment.

Gathering and validation of facts

As usual, a Java EE problem investigation requires gathering of technical and non-technical facts so we can either derived other facts and/or conclude on the root cause. Before applying a corrective measure, the facts below were verified in order to conclude on the root cause:

· What is the client impact? HIGH

· Recent change of the affected platform? Yes, platform was recently migrated from Oracle ALSB 2.6 (Solaris & HotSpot 1.5) to Oracle OSB 11g (AIX OS & IBM JRE 1.6)

· Any recent traffic increase to the affected platform? No

· How does this high CPU manifest itself? A sudden CPU increase was observed and is not going down; even after load goes down e.g. near zero level.

· Did an Oracle OSB recycle resolve the problem? Yes, but problem is returning after few hours or few days (unpredictable pattern)

- Conclusion #1: The high CPU problem appears to be intermittent vs. pure correlation with load

- Conclusion #2: Since high CPU remains after load goes down, this indicates either JVM threshold are triggered along with point of non-return and / or the presence of some hang or infinite looping Threads

AIX CPU analysis

AIX nmon & topas OS command were used to monitor the CPU utilization of the system and Java process. The CPU utilization was confirmed to go up as high as 100% utilization (saturation level).

Such high CPU level did remain very high until the JVM was recycled.

AIX CPU Java Thread breakdown analysis

One of the best troubleshooting approaches to deal with this type of issue is to generate an AIX ps –mp snapshot combined with Thread Dump. This was achieved by executing the command below:

ps -mp <Java PID> -o THREAD

Then immediately execute:

kill -3 <Java PID>

** This will generate a IBM JRE Thread Dump / Java core file (javacorexyz..) **

The AIX ps –mp command output was generated as per below:

USER PID PPID TID ST CP PRI SC WCHAN F TT BND COMMAND

user 12910772 9896052 - A 97 60 98 * 342001 - - /usr/java6_64/bin/java -Dweblogic.Nam

- - - 6684735 S 0 60 1 f1000f0a10006640 8410400 - - -

- - - 6815801 Z 0 77 1 - c00001 - - -

- - - 6881341 Z 0 110 1 - c00001 - - -

- - - 6946899 S 0 82 1 f1000f0a10006a40 8410400 - - -

- - - 8585337 S 0 82 1 f1000f0a10008340 8410400 - - -

- - - 9502781 S 2 82 1 f1000f0a10009140 8410400 - - -

- - - 10485775 S 0 82 1 f1000f0a1000a040 8410400 - - -

- - - 10813677 S 0 82 1 f1000f0a1000a540 8410400 -

- - - 21299315 S 95 62 1 f1000a01001d0598 410400 - - -

- - - 25493513 S 0 82 1 f1000f0a10018540 8410400 - - -

- - - 25690227 S 0 86 1 f1000f0a10018840 8410400 - - -

- - - 25755895 S 0 82 1 f1000f0a10018940 8410400 - - -

- - - 26673327 S 2 82 1 f1000f0a10019740 8410400 -

As you can see in the above snapshot, 1 primary culprit Thread Id (21299315) was found taking ~95% of the entire CPU.

Thread Dump analysis and PRSTAT correlation

Once the primary culprit Thread was identified, the next step was to correlate this data with the Thread Dump data and identify the source / culprit at the code level.

But first, we had to convert the decimal format to HEXA format since IBM JRE Thread Dump native Thread Id’s are printed in HEXA format.

Culprit Thread Id 21299315 >> 0x1450073 (HEXA format)

A quick search within the generated Thread Dump file did reveal the culprit Thread as per below.

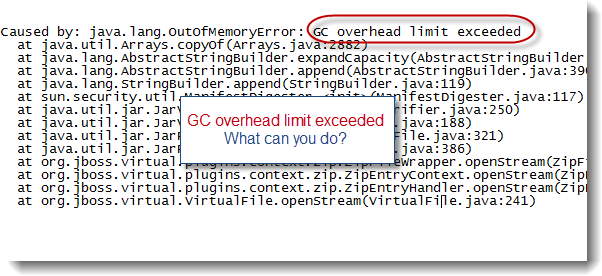

Weblogic ExecuteThread #97 Stack Trace can be found below:

3XMTHREADINFO "[STUCK] ExecuteThread: '97' for queue: 'weblogic.kernel.Default (self-tuning)'" J9VMThread:0x00000001333FFF00, j9thread_t:0x0000000117C00020, java/lang/Thread:0x0700000043184480, state:CW, prio=1

3XMTHREADINFO1 (native thread ID:0x1450073, native priority:0x1, native policy:UNKNOWN)

3XMTHREADINFO3 Java callstack:

4XESTACKTRACE at java/util/HashMap.findNonNullKeyEntry(HashMap.java:528(Compiled Code))

4XESTACKTRACE at java/util/HashMap.putImpl(HashMap.java:624(Compiled Code))

4XESTACKTRACE at java/util/HashMap.put(HashMap.java:607(Compiled Code))

4XESTACKTRACE at weblogic/socket/utils/RegexpPool.add(RegexpPool.java:20(Compiled Code))

4XESTACKTRACE at weblogic/net/http/HttpClient.resetProperties(HttpClient.java:129(Compiled Code))

4XESTACKTRACE at weblogic/net/http/HttpClient.openServer(HttpClient.java:374(Compiled Code))

4XESTACKTRACE at weblogic/net/http/HttpClient.New(HttpClient.java:252(Compiled Code))

4XESTACKTRACE at weblogic/net/http/HttpURLConnection.connect(HttpURLConnection.java:189(Compiled Code))

4XESTACKTRACE at com/bea/wli/sb/transports/http/HttpOutboundMessageContext.send(HttpOutboundMessageContext.java(Compiled Code))

4XESTACKTRACE at com/bea/wli/sb/transports/http/wls/HttpTransportProvider.sendMessageAsync(HttpTransportProvider.java(Compiled Code))

4XESTACKTRACE at sun/reflect/GeneratedMethodAccessor2587.invoke(Bytecode PC:58(Compiled Code))

4XESTACKTRACE at sun/reflect/DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:37(Compiled Code))

4XESTACKTRACE at java/lang/reflect/Method.invoke(Method.java:589(Compiled Code))

4XESTACKTRACE at com/bea/wli/sb/transports/Util$1.invoke(Util.java(Compiled Code))

4XESTACKTRACE at $Proxy115.sendMessageAsync(Bytecode PC:26(Compiled Code))

4XESTACKTRACE at com/bea/wli/sb/transports/LoadBalanceFailoverListener.sendMessageAsync(LoadBalanceFailoverListener.java:141(Compiled Code))

4XESTACKTRACE at com/bea/wli/sb/transports/LoadBalanceFailoverListener.onError(LoadBalanceFailoverListener.java(Compiled Code))

4XESTACKTRACE at com/bea/wli/sb/transports/http/wls/HttpOutboundMessageContextWls$RetrieveHttpResponseWork.handleResponse(HttpOutboundMessageContextWls.java(Compiled Code))

4XESTACKTRACE at weblogic/net/http/AsyncResponseHandler$MuxableSocketHTTPAsyncResponse$RunnableCallback.run(AsyncResponseHandler.java:531(Compiled Code))

4XESTACKTRACE at weblogic/work/ContextWrap.run(ContextWrap.java:41(Compiled Code))

4XESTACKTRACE at weblogic/work/SelfTuningWorkManagerImpl$WorkAdapterImpl.run(SelfTuningWorkManagerImpl.java:528(Compiled Code))

4XESTACKTRACE at weblogic/work/ExecuteThread.execute(ExecuteThread.java:203(Compiled Code))

4XESTACKTRACE at weblogic/work/ExecuteThread.run(ExecuteThread.java:171(Compiled Code))

Thread Dump analysis – HashMap infinite loop condition!

As you can see from the above Thread Stack Trace of Thread #97, the Thread is currently stuck in an infinite loop / Thread race condition over a java.util.HashMap object (IBM JRE implementation).

This finding was quite interesting given this HashMap is actually created / own by the Weblogic 11g kernel code itself >> weblogic/socket/utils/RegexpPool

Root cause: non Thread safe HashMap in Weblogic 11g (10.3.5.0) code!

Following this finding and data gathering exercise, our team created a SR with Oracle support which did confirm this defect within the Weblogic 11g code base.

As you may already know, usage of non Thread safe / non synchronized HashMap under concurrent Threads condition is very dangerous and can easily lead to internal HashMap index corruption and / or infinite looping. This is also a golden rule for any middleware software such as Oracle Weblogic, IBM WAS, Red Hat JBoss which rely heavily on HashMap data structures from various Java EE and caching services.

The most common solution is to use the ConcurrentHashMap data structure which is designed for that type of concurrent Thread execution context.

Solution

Since this problem was also affecting other Oracle Weblogic 11g customers, Oracle support was quite fast providing us with a patch for our target WLS 11g version. Please find the patch description and detail:

Content:

========

This patch contains Smart Update patch AHNT for WebLogic Server 10.3.5.0

Description:

============

HIGH CPU USAGE AT HASHMAP.PUT() IN REGEXPPOOL.ADD()

Patch Installation Instructions:

================================

- copy content of this zip file with the exception of README file to your SmartUpdate cache directory (MW_HOME/utils/bsu/cache_dir by default)

- apply patch using Smart Update utility

Conclusion

I hope this case study has helped you understand how to pinpoint culprit of high CPU Threads at the code level when using AIX & IBM JRE and the importance of proper Thread safe data structure for high concurrent Thread / processing applications.

Please don’t hesitate to post any comment or question.