The java.lang.OutOfMemoryError: Java heap space problem is one of the hardest problems you can face when supporting or developing complex Java EE applications.

This article describes this HotSpot JVM OutOfMemoryError message and explains how to approach the problem through to its resolution.

For a quick help guide on how to determine which type of OutOfMemoryError you are dealing with, please consult the related posts found in this Blog. You will also find tutorials on how to analyze a JVM Heap Dump and identify potential memory leaks. A troubleshooting guide video for beginners is also available from our YouTube channel.

java.lang.OutOfMemoryError: Java heap space – what is it?

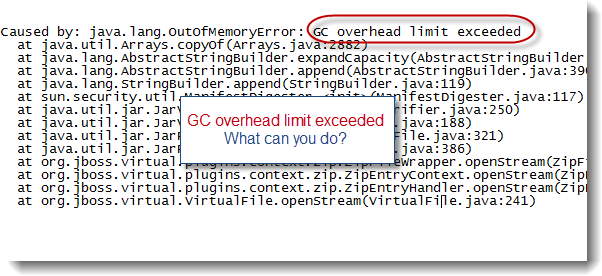

This error message is typically what you will see in your middleware server logs (Weblogic, WAS, JBoss etc.) following a JVM OutOfMemoryError condition:

· It is generated from the actual Java HotSpot VM native code

· It is triggered as a result of Java Heap (Young Gen / Old Gen spaces) memory allocation failure (due to Java Heap exhaustion)

Find below a code snippet from the OpenJDK project source code exposing the HotSpot JVM implementation. The code shows which condition triggers the OutOfMemoryError: Java heap space error.

# collectedHeap.inline.hpp

I strongly recommend that you download the HotSpot VM source code from OpenJDK yourself for your own benefit and future reference.

Ok, so my application Java Heap is exhausted…how can I monitor and track my application Java Heap?

The simplest way to properly monitor and track the memory footprint of your Java Heap spaces (Young Gen & Old Gen spaces) is to enable verbose GC from your HotSpot VM. Please simply add the following parameters within your JVM start-up arguments:

-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -Xloggc:<app path>/gc.log

You can also follow this tutorial which will help you understand how to read and analyze your HotSpot Java Heap footprint.
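As a complement to the verbose GC log, the same Young Gen / Old Gen figures can also be read from inside the JVM through the standard java.lang.management API. The following is only a minimal sketch: the class name HeapPoolReport and the output format are assumptions made for illustration, and the pool names printed depend on the garbage collector in use (for example "PS Old Gen" or "G1 Old Gen").

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, not part of any middleware or JDK tooling.
public class HeapPoolReport {

    /** Returns one formatted line per Java Heap memory pool (Young Gen and Old Gen spaces). */
    public static List<String> heapPoolLines() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) {
                continue; // skip non-heap pools such as the code cache
            }
            MemoryUsage usage = pool.getUsage();
            if (usage == null) {
                continue; // the pool is no longer valid
            }
            // getMax() returns -1 when the maximum size is undefined
            String max = usage.getMax() < 0 ? "undefined" : (usage.getMax() / 1024) + "K";
            lines.add(String.format("%s: used=%dK committed=%dK max=%s",
                    pool.getName(),
                    usage.getUsed() / 1024,
                    usage.getCommitted() / 1024,
                    max));
        }
        return lines;
    }

    public static void main(String[] args) {
        heapPoolLines().forEach(System.out::println);
    }
}
```

Logging these lines at a regular interval gives a rough trend of each heap space; it does not replace the verbose GC log, which also records pause times and collection frequency.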

Ok thanks, now I can see that I have a big Java Heap memory problem…but how can I fix it?

There are multiple scenarios which can lead to Java Heap depletion such as:

· Java Heap space too small vs. your application traffic & footprint

· Java Heap memory leak (OldGen space slowly growing over time…)

· Sudden and / or rogue Thread(s) consuming a large amount of memory in a short amount of time etc.
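To make the memory leak scenario more concrete, here is a small sketch of one common leak pattern: a static collection that only ever grows, next to a bounded alternative. All names (LeakSketch, UNBOUNDED, BOUNDED, MAX_ENTRIES, handleRequest) are hypothetical and the sizes are arbitrary; real leaks are usually less obvious than this.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of a leak-prone cache versus a bounded one.
public class LeakSketch {

    // Leak-prone pattern: a static map that is only ever added to.
    // Every entry stays strongly reachable, so the GC can never reclaim it.
    public static final Map<String, byte[]> UNBOUNDED = new HashMap<>();

    // Arbitrary limit chosen for this example only.
    public static final int MAX_ENTRIES = 100;

    // Bounded alternative: an access-ordered LinkedHashMap that evicts the
    // least recently used entry once MAX_ENTRIES is exceeded.
    public static final Map<String, byte[]> BOUNDED =
            new LinkedHashMap<String, byte[]>(16, 0.75f, true) {
                @Override
                protected boolean removeEldestEntry(Map.Entry<String, byte[]> eldest) {
                    return size() > MAX_ENTRIES;
                }
            };

    /** Simulates caching one payload per request in both maps. */
    public static void handleRequest(String sessionId) {
        byte[] payload = new byte[1024];
        UNBOUNDED.put(sessionId, payload);
        BOUNDED.put(sessionId, payload);
    }

    public static void main(String[] args) {
        for (int i = 0; i < 10_000; i++) {
            handleRequest("session-" + i);
        }
        System.out.println("unbounded entries: " + UNBOUNDED.size());
        System.out.println("bounded entries:   " + BOUNDED.size());
    }
}
```

With the unbounded map, retained memory grows with the number of distinct keys, which is the kind of slow OldGen growth described above. The bounded map stays at MAX_ENTRIES entries regardless of traffic.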

Find below a list of high level steps you can follow in order to further troubleshoot:

· If not done already, enable verbose GC >> -verbose:gc

· Analyze the verbose GC output and determine the memory footprint of the Java Heap for each of the Java Heap spaces (YoungGen & OldGen)

· Analyze the verbose GC output or use a tool like JConsole to determine if your Java Heap is leaking over time. This can be observed via monitoring of the HotSpot old gen space.

· Monitor your middleware Threads and generate JVM Thread Dumps on a regular basis, especially when a sudden increase of Java Heap utilization is observed. Thread Dump analysis will allow you to pinpoint any long running Thread(s) allocating a lot of objects on your Java Heap in a short amount of time

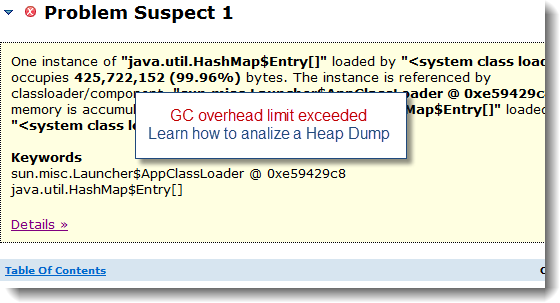

· Add the -XX:+HeapDumpOnOutOfMemoryError parameter to your JVM start-up arguments. This will allow your HotSpot VM to generate a binary Heap Dump (HPROF format) when an OutOfMemoryError occurs. A binary Heap Dump is a critical piece of data that allows you to analyze your application memory footprint and / or the source(s) of a Java Heap memory leak
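For the Thread Dump step above, a dump can also be produced from within the application through the standard ThreadMXBean, which may be convenient when you want to capture one automatically as heap utilization rises. This is a sketch only: the class name ThreadDumpSketch and the text layout are assumptions, and the output is not identical to the format produced by the usual JVM Thread Dump tools.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper class for capturing a textual Thread Dump.
public class ThreadDumpSketch {

    /** Builds a text dump of all live threads with their state and stack frames. */
    public static String threadDump() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        StringBuilder out = new StringBuilder();
        // false, false: skip monitor and synchronizer details to keep the dump cheap
        for (ThreadInfo info : threads.dumpAllThreads(false, false)) {
            if (info == null) {
                continue; // defensive guard for threads that have already terminated
            }
            out.append('"').append(info.getThreadName()).append('"')
               .append(" id=").append(info.getThreadId())
               .append(" state=").append(info.getThreadState())
               .append('\n');
            for (StackTraceElement frame : info.getStackTrace()) {
                out.append("    at ").append(frame).append('\n');
            }
            out.append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(threadDump());
    }
}
```

Taking several such dumps a few seconds apart and comparing them helps to spot Threads that stay in the same allocation-heavy code path.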

From a resolution perspective, my recommendation to you is to analyze your Java Heap memory footprint using the generated Heap Dump. The binary Heap Dump (HPROF format) can be analyzed using the free Memory Analyzer tool (MAT). This will allow you to understand your Java application footprint and / or pinpoint the source(s) of a possible memory leak. Once you have a clear picture of the situation, you will be able to resolve your problem by increasing your Java Heap capacity (via the -Xms & -Xmx arguments), reducing your application memory footprint and / or eliminating the memory leaks from your application code. Please note that memory leaks are often found in middleware server code and the JDK as well.
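If waiting for the next OutOfMemoryError is not practical, an HPROF Heap Dump for MAT can also be requested on demand. The sketch below assumes a HotSpot / OpenJDK based JVM, since it relies on the HotSpot-specific com.sun.management.HotSpotDiagnosticMXBean; the class name HeapDumpSketch, the temp directory and the file name heap.hprof are hypothetical choices for illustration.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper class; assumes a HotSpot / OpenJDK based JVM.
public class HeapDumpSketch {

    /** Writes an HPROF Heap Dump of the current JVM into a fresh temp directory. */
    public static Path dumpToTempFile() {
        try {
            Path dir = Files.createTempDirectory("heapdump");
            // The target file must not exist yet; recent JDKs also expect the .hprof suffix.
            Path file = dir.resolve("heap.hprof");
            HotSpotDiagnosticMXBean diagnostics =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            // true = dump only live (still reachable) objects
            diagnostics.dumpHeap(file.toString(), true);
            return file;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Heap dump written to " + dumpToTempFile());
    }
}
```

Keep in mind that generating a Heap Dump pauses the application and that the file can be roughly as large as the used Java Heap, so this is best triggered deliberately and with enough free disk space.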

I tried everything I could but I am still struggling to pinpoint the source of my OutOfMemoryError

Don’t worry, simply post a comment / question at the end of this article or email me directly at phcharbonneau@hotmail.com. I currently offer free IT / Java EE consulting. Please provide me with your problem description along with your generated data, such as a download link to your Heap Dump, Thread Dump data, server logs etc., and I will analyze your problem for you.