This case study will provide you with background on this type of problem and demonstrates how you can improve your performance for some of your SQL queries by tuning the Oracle JDBC driver defaultRowPrefetch value.

Environment specifications (case study)

· Java EE server: Oracle Weblogic Server 10.3.3

· Hardware: HP DL360 – 2 x Intel Xeon Processor x5670 (6 cores) @2.93 GHz & 72 GB RAM

· OS: 64-bit Red Hat Enterprise Linux (RHEL) version 5.5

· JDK: Sun 64-bit JDK/JRE 1.6.0_11+

· RDBMS: Oracle 10gR2

· Database Persistence API: Oracle Toplink version 11.1.1.3.0

· Platform type: Middle tier platform

Monitoring and troubleshooting tools

- UNIX / Linux traceroute command

- Oracle AWR Report (to access health of the database and SQL response time)

Problem overview

- · Problem type: Oracle JDBC driver Socket read hang and slower SQL response time from one regional location of our Weblogic application server

Higher response time was observed from one of one Weblogic application server location. Our environment is built as per below:

- The application is deployed across 2 different regional locations (region #1 & region #2) with one Weblogic cluster deployed in each region

- The primary Oracle database server is deployed to the region #1

- The cold standby Oracle database (used for fail-over only) is deployed to the region #2

- The Weblogic cluster #1 is deployed to the region #1

- The Weblogic cluster #2 is deployed to the region #2

Major slowdown was observed for SQL transactions executed from region #2 (cross regional communication with the database).

Gathering and validation of facts

As usual, a Java EE problem investigation requires gathering of technical and non technical facts so we can either derived other facts and/or conclude on the root cause. Before applying a corrective measure, the facts below were verified in order to conclude on the root cause:

· What is the client impact? MEDIUM (higher response time observed in an intermittent manner; depending on which region the user is hitting)

· Is slowdown affecting all transactions? No, slowdown was isolated to transactions executed from Weblogic cluster #2; involved in a cross regional communication with our Oracle database

· Did DBA investigate the health of the database and SQL performance? Yes, no problem was found with the database and no slow running SQL was found from the Oracle AWR report

- Conclusion #1: The problem appear to be isolated to transactions executed from Weblogic cluster #2 which is communication with the primary Oracle database server located in the region #1. The decision was then taken to assess any network latency problem between region #1 and region #2

Network latency analysis

Network latency between the Weblogic clusters and the Oracle database server was measured at high level via the traceroute command.

Latency between Weblogic cluster #1 (region #1) and database server (region #1)

bash-2.05$ traceroute <server hostname>

traceroute: Warning: Multiple interfaces found; using xyz.xyz.xyz.xyz @ eri0

traceroute to <server hostname> (xyz.xyz.xyz.xyz), 30 hops max, 40 byte packets

1 lt;server hostname> (xyz.xyz.xyz.xyz) 0.228 ms 0.151 ms 0.335 ms

** Latency of ~0.3 ms with no HOP in between **

Latency between Weblogic cluster #2 (region #2) and database server (region #1)

$ traceroute <db server hostname>

traceroute: Warning: Multiple interfaces found; using xyz.xyz.xyz.xyz @ eri0

traceroute to <db server hostname> (xyz.xyz.xyz.xyz), 30 hops max, 40 byte packets

1 <router hostname> (xyz.xyz.xyz.xyz) 1.854 ms 1.786 ms 1.460 ms

2 <router hostname> (xyz.xyz.xyz.xyz) 0.298 ms 0.284 ms 0.322 ms

3 <router hostname> (xyz.xyz.xyz.xyz) 0.648 ms 0.389 ms 0.414 ms

4 <router hostname> (xyz.xyz.xyz.xyz) 0.294 ms 0.562 ms 0.330 ms

5 <router hostname> (xyz.xyz.xyz.xyz) 0.695 ms 0.837 ms 0.695 ms

6 <router hostname> (xyz.xyz.xyz.xyz) 10.180 ms 10.173 ms 10.039 ms

7 <router hostname> (xyz.xyz.xyz.xyz) 20.091 ms 19.767 ms 20.157 ms

8 <router hostname> (xyz.xyz.xyz.xyz) 9.732 ms 9.908 ms 9.464 ms

9 <db server hostname> xyz.xyz.xyz.xyz 9.814 ms 10.238 ms 9.765 ms

** Latency of ~50 ms with 8 HOPS (router, switch etc.) in between **

Graphical view of the network latency between region #1 and region #2

- Conclusion #2: A higher network latency was confirmed between Weblogic cluster #2 and database server (region #1). Now question was to determine why such latency was causing so much impact on the SQL response time

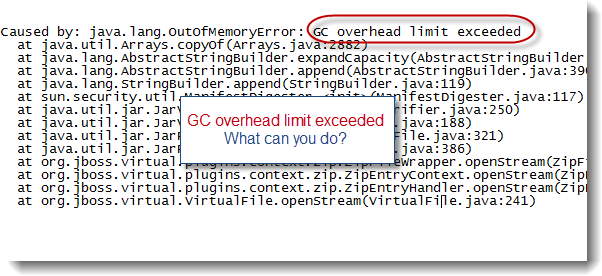

Thread Dump analysis

A few Thread Dump snapshot were taken from Weblogic cluster #2 which did reveal the following bottleneck:

ExecuteThread: '61' for queue: 'weblogic.kernel.Default'" daemon prio=5 tid=0x00970d58 nid=0x51 runnable [a8b7e000..a8b7fc28]

at java.net.SocketInputStream.socketRead0(Native Method)

at java.net.SocketInputStream.read(SocketInputStream.java:129)

at oracle.net.ns.Packet.receive(Unknown Source)

at oracle.net.ns.NetInputStream.getNextPacket(Unknown Source)

at oracle.net.ns.NetInputStream.read(Unknown Source)

at oracle.net.ns.NetInputStream.read(Unknown Source)

at oracle.net.ns.NetInputStream.read(Unknown Source)

at oracle.jdbc.ttc7.MAREngine.unmarshalUB1(MAREngine.java:718)

at oracle.jdbc.ttc7.MAREngine.unmarshalSB1(MAREngine.java:690)

at oracle.jdbc.ttc7.Oall7.receive(Oall7.java:373)

at oracle.jdbc.ttc7.TTC7Protocol.doOall7(TTC7Protocol.java:1405)

at oracle.jdbc.ttc7.TTC7Protocol.fetch(TTC7Protocol.java:889)

- locked <0xc7660c90> (a oracle.jdbc.ttc7.TTC7Protocol)

at oracle.jdbc.driver.OracleResultSetImpl.next(OracleResultSetImpl.java:242)

- locked <0xbaaa51d0> (a oracle.jdbc.driver.OracleResultSetImpl)

at weblogic.jdbc.wrapper.ResultSet_oracle_jdbc_driver_OracleResultSetImpl.next(Unknown Source)

at oracle.toplink.internal.databaseaccess.DatabaseAccessor.executeCall(Unknown Source)

at oracle.toplink.threetier.ServerSession.executeCall(Unknown Source)

at oracle.toplink.internal.queryframework.CallQueryMechanism.executeCall(Unknown Source)

at oracle.toplink.internal.queryframework.CallQueryMechanism.executeCall(Unknown Source)

at oracle.toplink.internal.queryframework.CallQueryMechanism.executeSelectCall(Unknown Source)

at oracle.toplink.internal.queryframework.CallQueryMechanism.selectAllRows(Unknown Source)

at oracle.toplink.internal.queryframework.ExpressionQueryMechanism.selectAllRowsFromTable(Unknown Source)

at oracle.toplink.internal.queryframework.ExpressionQueryMechanism.selectAllRows(Unknown Source)

at oracle.toplink.queryframework.ReadAllQuery.execute(Unknown Source)

…………………………………………………………………………………………………………………………………………………………………………………………………

As you can see in the above Thread Dump data snippet, most of the time was spent by our application / Toplink trying to fetch the data while consuming / iterating through the ResultSet object.

The Oracle JDBC Driver is configured with a default row pre fetch of 10 (If more than 10 rows exist in the result set of an SQL query, the driver will iteratively return 10 rows at a time up to the total of the result set. The fetch size is the amount of rows the driver is keeping in its cache during a transaction).

It was found that a lot of our SQL was returning about 100 up to 2000 data rows per execution so we then approximately calculated the network latency impact based on the default JDBC driver fetch settings:

· Formula: Query Response Time = Query Execution Time + (network latency * (#rows returned / defaultRowPrefetch))

· The SQL used as for our problem reproduction was returning 1500 data rows with an Oracle SQL execution of only 100ms:

· Total Query response time (WL cluster #2) = 100ms + (50ms * (1500 / 10)) = 7600 ms

· Total Query response time (WL cluster #1) = 100ms + (0.3ms * (1500 / 10)) = 145 ms

As you can see in the above results, the difference of response time was drastic between each regional Weblogic cluster and completely dependent on the network latency. A combination of higher response time from region #2 to region #1 and low default fetch value was identified as the root cause of the problem.

Solution

Since network latency could not be improved in our situation, the next logical solution was to attempt to increase the default Oracle JDBC fetch to a higher value (500 in our case) in order to limit the number of back and forward to the database to a minimum level. This was achieved by adding a Property in the Weblogic Connection Pool settings:

· Find below the SQL response time after increasing defaultRowPrefetch to 500:

· Total Query response time (WL cluster #2) = 100ms + (50ms * (1500 / 500)) = 250 ms

· Total Query response time (WL cluster #1) = 100ms + (0.3ms * (1500 / 500)) = 100 ms

As you can see, the above results were quite conclusive and we were able to reduce the impact of the Network latency to a minimum level by increasing the Oracle JDBC driver defaultRowPrefetch.

Recommendations

I hope this article has helped you understand the analysis approach and how this simple tuning can have such a positive impact on your SQL response time. Fine below my general recommendations regarding defaultRowPrefetch tuning:

- When facing abnormal slowdown of your SQL response time, please review your network latency and # of rows returned from your application. You may benefit from increasing the default Oracle JDBC driver defaultRowPrefetch to a higher value if your SQL queries is returning 50 rows+

- Be careful and do not increase the defaultRowPrefetch too high. Please keep in mind the such data is stored in the JDBC driver memory buffer cache so always do proper analysis, capacity planning and assess any negative impact on your Java memory following any defaultRowPrefetch increase